This post shows how to run DeepSeek-R1, an open-weight reasoning model, locally with Ollama and how to chat with it through Open WebUI, a ChatGPT-like web interface.

First, create and activate a dedicated Python 3.11 environment with Anaconda:

```bash
conda create -n webui python=3.11 -y && conda activate webui
```
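The environment pins Python 3.11. A quick check can confirm that the activated env really supplies that interpreter, so you catch a wrong one (for example, the base env still active) before installing anything. This is a minimal sketch for a POSIX-style shell. `check_python` is a hypothetical helper, not a conda command:

```bash
# Hypothetical helper (not part of conda): check that the interpreter on PATH
# reports the major.minor version the env was created with.
check_python() {
  local want="${1:-3.11}"   # expected major.minor version
  local have
  # Ask the active interpreter for its own major.minor version.
  have="$(python -c 'import sys; print("%d.%d" % sys.version_info[:2])')" || return 1
  if [ "$have" = "$want" ]; then
    echo "ok: python $have"
  else
    echo "expected python $want but found $have; is the webui env active?" >&2
    return 1
  fi
}
```

Run `check_python 3.11` right after `conda activate webui`. It prints `ok: python 3.11` on success and returns a non-zero status otherwise.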

Ollama is a lightweight model server that downloads, manages, and runs AI models locally. On Linux, install it with the official script. Installers for macOS and Windows are available from ollama.com:

```bash
curl -fsSL https://ollama.com/install.sh | sh

# Verify installation
ollama --version
```
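`ollama --version` only confirms that the CLI is installed. The models themselves are served by a background server, which listens on `http://localhost:11434` by default. The Linux installer typically registers this server as a system service. The sketch below polls the server's `/api/version` endpoint until it answers. The helper name, the 10 attempts, and the one-second delay are assumptions:

```bash
# Hypothetical helper: poll the local Ollama server until it answers.
# Defaults (address, 10 attempts, 1 s apart) are assumptions; override via $1 and $2.
wait_for_ollama() {
  local url="${1:-http://localhost:11434}"
  local tries="${2:-10}"
  local i=1
  while [ "$i" -le "$tries" ]; do
    # -f: treat HTTP errors as failures, -sS: quiet but still report errors
    if curl -fsS --max-time 2 "$url/api/version" >/dev/null 2>&1; then
      echo "ollama is up at $url"
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "no response from $url after $tries attempts; is 'ollama serve' running?" >&2
  return 1
}
```

If it gives up, start the server manually with `ollama serve` in another terminal and run `wait_for_ollama` again.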

Next, install the Open WebUI interface into the same environment:

```bash
pip install open-webui
```

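Then choose a model size. The smallest option, the distilled Qwen-based 1.5B model, is a good starting point. `ollama run` downloads the model on first use and then opens an interactive prompt (type `/bye` to exit):

```bash
# DeepSeek-R1-Distill-Qwen-1.5B
ollama run deepseek-r1:1.5b
```

Ollama also provides the other DeepSeek-R1 sizes. Larger models need more memory and disk space: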

```bash
# DeepSeek-R1-Distill-Qwen-7B
ollama run deepseek-r1:7b

# DeepSeek-R1-Distill-Llama-8B
ollama run deepseek-r1:8b

# DeepSeek-R1-Distill-Qwen-14B
ollama run deepseek-r1:14b

# DeepSeek-R1-Distill-Qwen-32B
ollama run deepseek-r1:32b

# DeepSeek-R1-Distill-Llama-70B
ollama run deepseek-r1:70b

# DeepSeek-R1 (full 671B model)
ollama run deepseek-r1:671b
```
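The size that fits depends mostly on available memory. The rough helper below is for Linux: it reads total RAM from `/proc/meminfo` and suggests a tag. The GiB thresholds are ballpark assumptions, not official requirements, and both function names are hypothetical:

```bash
# Hypothetical helpers: suggest a deepseek-r1 tag from total system RAM.
# The thresholds are ballpark assumptions, not official requirements, and
# they ignore GPU VRAM. deepseek-r1:671b is deliberately left out.

# Total memory in GiB (rounded to nearest), read from /proc/meminfo on Linux.
total_ram_gb() {
  # MemTotal is reported in kB (KiB); 1048576 KiB = 1 GiB.
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1048576 + 0.5 }' "${1:-/proc/meminfo}"
}

# Print the largest tag that should plausibly fit in the given RAM (GiB).
pick_model() {
  local ram="$1"
  if   [ "$ram" -ge 64 ]; then echo "deepseek-r1:70b"
  elif [ "$ram" -ge 32 ]; then echo "deepseek-r1:32b"
  elif [ "$ram" -ge 16 ]; then echo "deepseek-r1:14b"
  elif [ "$ram" -ge 12 ]; then echo "deepseek-r1:7b"
  else                         echo "deepseek-r1:1.5b"
  fi
}
```

For example, `ollama run "$(pick_model "$(total_ram_gb)")"` pulls and starts the suggested tag. On an 8 GB machine it suggests `deepseek-r1:1.5b`.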

With Ollama running, start the Open WebUI server:

```bash
open-webui serve
```

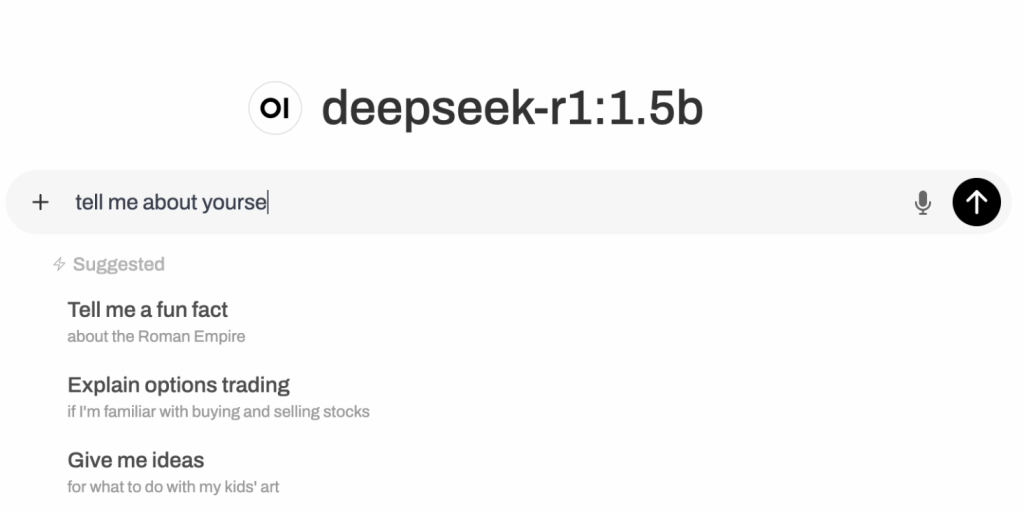

Once the server is up, open http://localhost:8080 in your browser. Create an account when prompted (the first account becomes the admin), then select the DeepSeek-R1 model you pulled and start chatting.

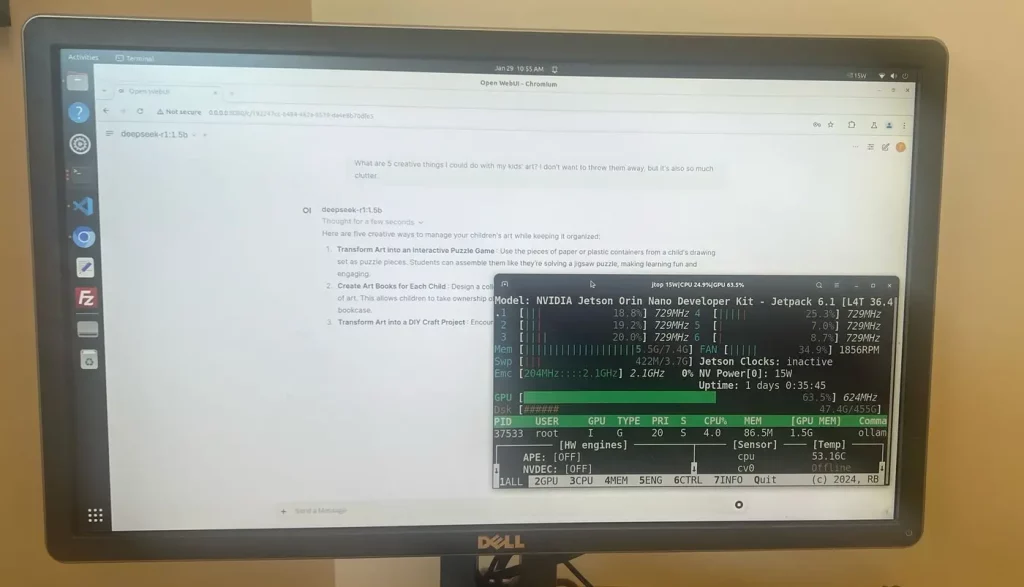
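Open WebUI talks to the same local Ollama server, so you can also script against that server directly. The sketch below sends one prompt to Ollama's `/api/generate` endpoint with `curl` and prints only the final answer. It makes three assumptions: Ollama is at its default address `http://localhost:11434`; `python3` is on PATH for JSON handling (the conda env provides it); and any reasoning arrives wrapped in `<think>…</think>` tags, the way DeepSeek-R1 emits it. `ask_r1` and `strip_think` are hypothetical names:

```bash
# Hypothetical helpers: send one prompt to a local DeepSeek-R1 model through
# Ollama's REST API and print only the final answer.

# Drop every line from <think> through </think>; other lines pass through.
strip_think() {
  awk '
    /<think>/   { skip = 1 }
    !skip       { print }
    /<\/think>/ { skip = 0 }
  '
}

# Usage: ask_r1 PROMPT [MODEL] [BASE_URL]
ask_r1() {
  local prompt="$1"
  local model="${2:-deepseek-r1:1.5b}"
  local url="${3:-http://localhost:11434}"   # Ollama's default address
  local payload
  # Let Python do the JSON escaping so quotes or newlines in PROMPT are safe.
  payload="$(python3 -c 'import json, sys; print(json.dumps({"model": sys.argv[1], "prompt": sys.argv[2], "stream": False}))' \
    "$model" "$prompt")" || return 1
  # With "stream": false, Ollama replies with one JSON object whose
  # "response" field holds the generated text.
  curl -fsS "$url/api/generate" -d "$payload" \
    | python3 -c 'import json, sys; print(json.load(sys.stdin)["response"])' \
    | strip_think
}
```

For example, `ask_r1 "Why is the sky blue?"` prints the answer without the reasoning trace. `strip_think` works line by line, so any text that shares a line with `</think>` is dropped as well.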

The smaller distilled models also run on edge devices. I tested `deepseek-r1:1.5b` on an NVIDIA Jetson Nano with 8 GB of RAM, and it ran smoothly.

© 2025 koraiio.